AI Chips: A Guide to Cost-efficient AI Training & Inference in 2024

In the last decade, machine learning, especially deep neural networks, has played a critical role in the emergence of commercial AI applications. Deep neural networks were successfully implemented in the early 2010s thanks to the increased computational capacity of modern computing hardware. AI hardware is a new generation of hardware custom-built for machine learning applications.

As artificial intelligence and its applications become more widespread, the race to develop cheaper and faster chips is likely to accelerate among tech giants. Companies can either rent this hardware from cloud service providers, for example through Amazon AWS’ SageMaker service, or buy their own hardware. Owning hardware can result in lower costs if utilization can be kept high. If not, companies are better off relying on cloud vendors.

What are AI chips?

AI chips (also called AI hardware or AI accelerators) are accelerators specially designed for artificial neural network (ANN) based applications. Most commercial ANN applications are deep learning applications.

ANNs are a subfield of artificial intelligence: a machine learning approach inspired by the human brain. An ANN consists of layers of artificial neurons, which are mathematical functions inspired by how human neurons work. ANNs can be built as deep networks with multiple layers, and machine learning that uses such networks is called deep learning. Deep learning has two main use cases:

- Training: A deep ANN is fed thousands of labeled examples so that it can identify patterns. Training is time-consuming and computationally intensive.

- Inference: As a result of the training process, the trained ANN is able to make predictions based on new inputs.
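The difference between the two phases can be illustrated with a minimal sketch. It uses a single artificial neuron (logistic regression) rather than a deep network, and the toy dataset, function names and hyperparameters are assumptions made for illustration only. Training loops over the labeled data many times, while inference is a single forward pass.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train(samples, labels, epochs=2000, lr=0.5):
    """Training: repeatedly adjust the weights to fit the labeled examples."""
    w = [0.0] * len(samples[0])
    b = 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            pred = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
            err = pred - y  # gradient of the log loss w.r.t. the pre-activation
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
            b -= lr * err
    return w, b

def infer(model, x):
    """Inference: one forward pass with the already learned weights."""
    w, b = model
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

# Hypothetical toy data: the label is 1 only when both inputs are 1 (logical AND).
X = [[0, 0], [0, 1], [1, 0], [1, 1]]
Y = [0, 0, 0, 1]

model = train(X, Y)                          # thousands of weight updates
print([round(infer(model, x)) for x in X])   # one cheap pass per input
```

Even in this tiny case, training performs 8,000 weight updates while each prediction needs only one weighted sum, which is why the two phases tend to place different demands on hardware.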

Although general purpose chips can also run ANN applications, they are not the most effective solution for this software. There are multiple types of AI chips because different types of ANN applications call for different customizations. For example, in some IoT applications where devices need to operate on a battery, AI chips need to be physically small and built to function efficiently with low power. This leads chip manufacturers to make different architectural choices when designing chips for different applications.

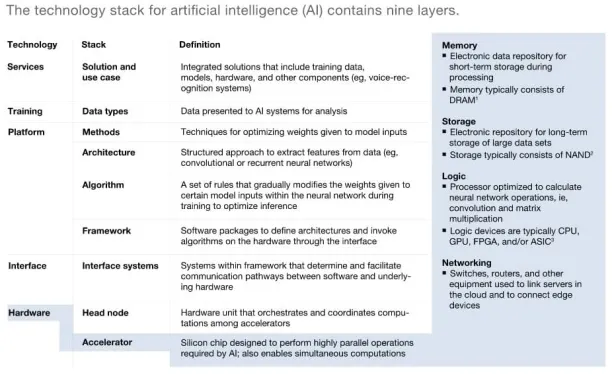

What are an AI chip’s components?

The hardware infrastructure of an AI chip consists of three parts: computing, storage and networking. While computing (processing) speed has been improving rapidly in recent years, storage and networking performance appear to need more time to catch up. Hardware giants like Intel, IBM and Nvidia are competing to improve the storage and networking modules of the hardware infrastructure.

Why are AI chips higher performing than general purpose chips?

General purpose hardware uses arithmetic blocks for basic in-memory calculations. Its largely serial processing does not provide sufficient performance for deep learning techniques:

- Neural networks need many parallel/simple arithmetic operations

- Powerful general purpose chips cannot support a high number of simple, simultaneous operations

- AI-optimized hardware includes numerous less powerful processing units, which enable parallel processing
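To make the "many simple operations" point concrete, here is a minimal sketch of one fully connected layer. The function names and sample values are assumptions chosen for illustration. Each output is an independent dot product, so parallel hardware could in principle compute all of them at once.

```python
def dense_layer(inputs, weights):
    """Compute one fully connected layer: one dot product per output neuron."""
    # Each output depends only on the shared inputs and its own weight row,
    # so all rows could be evaluated at the same time on parallel hardware.
    return [sum(w * x for w, x in zip(row, inputs)) for row in weights]

def mac_count(n_inputs, n_outputs):
    """Multiply-accumulate operations needed for one layer and one input."""
    return n_inputs * n_outputs

outputs = dense_layer([1.0, 2.0, 3.0], [[0.5, 0.0, 1.0], [1.0, 1.0, 1.0]])
print(outputs)                # [3.5, 6.0]
print(mac_count(4096, 4096))  # 16777216
```

A single layer with 4,096 inputs and 4,096 outputs already needs about 16.8 million multiply-accumulates per input, none of which is individually complex.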

AI accelerators bring the following advantages over general purpose hardware:

- Faster computation: Artificial intelligence applications typically require parallel computational capabilities in order to run sophisticated training models and algorithms. AI hardware provides more parallel processing capability and is estimated to have up to 10 times more computing power in ANN applications than traditional semiconductor devices at similar price points.

- High bandwidth memory: Specialized AI hardware is estimated to allocate 4-5 times more bandwidth than traditional chips. This is necessary because parallel processing requires significantly more bandwidth between processors for AI applications to perform efficiently.
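One common way to reason about why bandwidth matters alongside raw compute is the roofline model, which is not part of the estimates above and is used here only as an illustrative sketch. The chip figures are hypothetical. The model caps achievable throughput at whichever is lower: peak compute, or bandwidth multiplied by the number of operations performed per byte moved.

```python
def attainable_flops(peak_flops, mem_bandwidth_bytes, flops_per_byte):
    """Roofline estimate: throughput is capped by compute or by memory traffic."""
    return min(peak_flops, mem_bandwidth_bytes * flops_per_byte)

# Hypothetical chip: 100 TFLOP/s peak compute, 1 TB/s memory bandwidth.
PEAK = 100e12
BW = 1e12

for intensity in (10, 100, 1000):  # FLOPs performed per byte moved
    perf = attainable_flops(PEAK, BW, intensity)
    bound = "memory-bound" if perf < PEAK else "compute-bound"
    print(f"{intensity:>5} FLOP/byte -> {perf / 1e12:.0f} TFLOP/s ({bound})")
```

Under these assumed numbers, a workload doing only 10 operations per byte would reach a tenth of the chip's peak, so adding more cores without more bandwidth would not help it.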

Why are AI chips important now?

Deep neural network powered solutions make up most commercial AI applications. The number and importance of these applications have been growing strongly since the 2010s and are expected to keep growing at a similar pace. For example, McKinsey predicts that AI applications will generate $4-6 trillion of value annually.

Another recent McKinsey study states that AI-associated semiconductors will see approximately 18 percent growth annually over the next few years. This is five times the growth of semiconductors used in non-AI applications. The same study estimates that AI hardware will become a $67 billion market in revenue.

What are AI chip design approaches?

AI chips use novel architectures for improved performance. We sorted some of these approaches from the most commonplace to the emerging:

- GPUs: Graphics Processing Units were originally designed for accelerating graphical processing via parallel computing. The same approach is also effective for training deep learning models, and GPUs are currently one of the most common types of hardware used by deep learning software developers.

- Wafer-scale chips: For example, Cerebras builds wafer-scale chips by producing a 46,225 square millimeter (~72 square inch) silicon wafer containing 1.2 trillion transistors as a single chip. Thanks to its large area, the chip has 400,000 processing cores on it. Such large chips exhibit economies of scale but present novel materials science and physics challenges.

- Reconfigurable neural processing unit (NPU): The architecture provides parallel computing and pooling to increase overall performance. It is specialized in Convolutional Neural Network (CNN) applications, a popular ANN architecture for image recognition. Kneron, a low power edge AI startup based in San Diego and Taipei, licenses the reconfigurable NPU architecture on which its chips are based. Because this architecture can be reconfigured to switch between models in real time, the hardware can be optimized for the needs of the application. The US National Institute of Standards and Technology (NIST) recognized Kneron’s facial recognition model as the best performing model under 100 MB.

- Neuromorphic chip architectures: These attempt to mimic brain cells using novel approaches from adjacent fields such as materials science and neuroscience. These chips can have an advantage in speed and efficiency when training neural networks. Intel has been producing such chips for the research community since 2017 under the names Loihi and Pohoiki.

- Analog memory-based technologies: Digital systems built on 0’s and 1’s dominate today’s computing world. Analog techniques, in contrast, use signals that vary continuously rather than taking discrete values. An IBM research team demonstrated that large arrays of analog memory devices achieve similar levels of accuracy as GPUs in deep learning applications.

What are the important criteria in assessing AI Hardware?

The needs of the team are the most important criterion. If your team can rely on cloud providers, solutions such as AWS SageMaker enable teams to experiment with model training quickly by scaling their software to run on numerous GPUs. However, this comes with higher costs than on-premise deployment. Therefore, the cloud can be a great platform for initial testing but may not be appropriate for a large team building a mature application that would keep the company’s own AI hardware highly utilized.
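The utilization argument can be turned into a rough break-even estimate. The sketch below is a simplification under stated assumptions: every price, lifetime and operating cost is a hypothetical placeholder rather than a quote from any vendor, and it ignores factors such as staffing, resale value and cloud discounts.

```python
HOURS_PER_YEAR = 365 * 24

def owned_cost_per_used_hour(purchase_price, lifetime_years, yearly_opex, utilization):
    """Cost of one hour of actual use on owned hardware (utilization in (0, 1])."""
    hours_used = lifetime_years * HOURS_PER_YEAR * utilization
    total_cost = purchase_price + yearly_opex * lifetime_years
    return total_cost / hours_used

def break_even_utilization(purchase_price, lifetime_years, yearly_opex, cloud_price_per_hour):
    """Utilization above which owning is cheaper than renting by the hour."""
    total_cost = purchase_price + yearly_opex * lifetime_years
    return total_cost / (cloud_price_per_hour * lifetime_years * HOURS_PER_YEAR)

# Hypothetical figures: a $30,000 server kept for 3 years with $4,000 per year
# for power and hosting, compared with a $4 per hour cloud instance.
threshold = break_even_utilization(30_000, 3, 4_000, 4.0)
print(f"Owning pays off above roughly {threshold:.0%} utilization")
print(f"Cost per used hour at 20% utilization: "
      f"${owned_cost_per_used_hour(30_000, 3, 4_000, 0.20):.2f}")
```

With these placeholder numbers, a team that keeps the machine busy less than about 40% of the time would pay more per useful hour than it would on the cloud.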

Once you decide that your company needs to buy its own AI chips, these are the important characteristics of chips to be used in an assessment:

- Processing speed: AI hardware enables faster training and inference using neural networks.

  - Faster training enables machine learning engineers to try different deep learning approaches or to optimize the structure of their neural network (hyperparameter optimization).

  - Faster inference (e.g. predictions) is critical for applications like autonomous driving.

- Development platforms: Building applications on a standalone chip is challenging because the chip needs to be supported by other hardware and software before developers can build applications on it using high level programming languages. An AI accelerator that lacks a development board is challenging to use at first and difficult to benchmark.

- Power requirements: Chips that will function on battery need to be able to work with limited power consumption to maximize device lifetime.

- Size: Device size may be important in applications such as mobile phones or small IoT devices.

- Cost: As always, Total Cost of Ownership of the device is critical for any procurement decision.
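For the power criterion, a back-of-the-envelope battery estimate is often enough for a first screening. The following sketch assumes a simple two-state model (active or idle), and all wattages and the battery capacity are hypothetical values, not measurements of any real chip.

```python
def battery_life_hours(battery_wh, active_watts, idle_watts, duty_cycle):
    """Estimated runtime, given the fraction of time the chip is actively working."""
    avg_watts = active_watts * duty_cycle + idle_watts * (1 - duty_cycle)
    return battery_wh / avg_watts

# Hypothetical device: 10 Wh battery, 2 W while running inference,
# 0.05 W while idle, and inference running 10% of the time.
hours = battery_life_hours(10, 2.0, 0.05, 0.10)
print(f"Estimated runtime: {hours:.1f} hours")
```

The same function can be used to compare candidate chips by plugging in each one's active and idle power draw for the expected duty cycle.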

Are there AI hardware benchmarks?

An objective performance benchmark of AI hardware on deep learning applications is difficult to obtain. Existing benchmarks tend to compare two different pieces of AI hardware in terms of speed and power consumption.

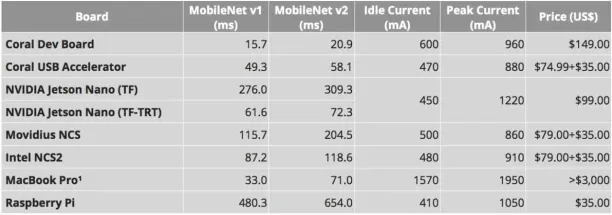

Here is a Medium article that compares several AI hardware devices with both an Apple computer and some development boards.

This benchmark compares several devices with a simple computer and a MacBook Pro. The first six rows are different AI hardware. While the MacBook's performance is better than that of some AI accelerators, its power consumption and price make it prohibitively expensive.

It is difficult to find an objective benchmark for general deep learning usage. Both cloud and on-premise AI hardware users are advised to first benchmark these systems with their own applications to understand their performance. While benchmarking cloud services is relatively easy, benchmarking your own hardware can be more time-consuming. If the hardware is commonly available, companies can find it on a cloud service and benchmark its performance there, as some cloud services openly share the underlying hardware specs. If such a test cannot be run on the cloud, sample hardware would need to be requested from the supplier for testing.
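Benchmarking with your own application can start with a very small timing harness. The sketch below uses only the Python standard library. The `workload` function is a hypothetical stand-in that you would replace with a call to your own model, and the warm-up and repeat counts are arbitrary assumptions.

```python
import statistics
import time

def benchmark(fn, *args, warmup=3, repeats=20):
    """Time fn(*args) and return (median, worst) latency in seconds."""
    for _ in range(warmup):   # warm-up runs are discarded
        fn(*args)
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn(*args)
        timings.append(time.perf_counter() - start)
    return statistics.median(timings), max(timings)

# Stand-in workload (hypothetical): replace with your own model's inference call.
def workload(n):
    return sum(i * i for i in range(n))

median_s, worst_s = benchmark(workload, 100_000)
print(f"median {median_s * 1e3:.2f} ms, worst {worst_s * 1e3:.2f} ms")
```

Running the same harness with the same inputs on each candidate system gives numbers that are at least comparable with each other. On accelerators that execute work asynchronously, the timed call would also need to wait for the device to finish, otherwise the measurement only captures the time to submit the work.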

What are the leading providers for AI Hardware?

The technical complexity of producing a working semiconductor device makes it difficult for startups or small teams to build AI hardware. This is why hardware tech leaders dominate the AI hardware industry. According to Forbes, even Intel, with numerous world class engineers and a strong research background, needed 3 years of work to build the Nervana neural network processor.

We have prepared a comprehensive, sortable list of companies working on AI chips. You can also see some of the companies working on AI hardware below:

- Advanced Micro Devices (AMD)

- Apple

- Arm

- Baidu

- Google (Alphabet)

- Graphcore

- Huawei

- IBM

- Intel

- Microsoft

- Nvidia

- Texas Instruments

- Qualcomm

- Xilinx

For in-depth analysis regarding AI chip makers you can read our Top 10 AI Chip Makers: In-depth Guide article.

Also, don’t forget to read our article on TinyML.

Cem has been the principal analyst at AIMultiple since 2017. AIMultiple informs hundreds of thousands of businesses (as per similarWeb) including 60% of Fortune 500 every month.