Measuring AI Advancement Through Games in 2024

When we talk about how far Artificial Intelligence has come, we often use numbers to make our point. We build upon the previous work, and if we have done our job well, we end up taking an incremental step towards the future. However, what changes our perception is seeing what our steps allow us to accomplish.

In August 2017, OpenAI, the research lab co-founded by Elon Musk, beat a professional human player at Dota 2, a wildly successful computer game that is also played as a competitive e-sport. With that milestone in mind, we bring you two decades of advancement in Artificial Intelligence, and how it is slowly but surely conquering competitive games.

Chess

May 11, 1997. The world holds its breath as the IBM supercomputer Deep Blue, designed to be the best chess player in the world, finally beats the world chess champion, Garry Kasparov, in a match. It is unprecedented. The news spreads like wildfire. The machine beats the man.

That might sound like exaggeration, but a machine beating the world champion at a strategy game really was a first. Looking back, Deep Blue’s main strength was its computing power. It used a brute-force approach, calculating as many possible futures as it could within the time limit, and chose the moves that would lead to the best end-game positions. It could search 200 million positions per second, which generally translates to looking 6-8 moves into the future, and it was among the 500 most powerful supercomputers of its time. It was a show of strength, a feat no human could replicate.
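The brute-force idea described above can be illustrated with a small depth-limited minimax search. This is only a sketch on a toy game chosen for brevity (players alternately remove 1 to 3 stones, and whoever takes the last stone wins); it is not Deep Blue’s code, and the function names and the scoring scheme are assumptions made for this example.

```python
from typing import List

def legal_moves(stones: int) -> List[int]:
    """In this toy game a player may remove 1, 2, or 3 stones."""
    return [n for n in (1, 2, 3) if n <= stones]

def evaluate(stones: int, maximizing: bool) -> int:
    """Score a position from the maximizing player's point of view.

    With no stones left, the player who just moved took the last stone
    and won. Positions cut off by the depth limit are scored 0 (unknown).
    """
    if stones == 0:
        return -1 if maximizing else 1
    return 0

def minimax(stones: int, depth: int, maximizing: bool) -> int:
    """Look `depth` moves ahead, assuming both sides play their best."""
    if stones == 0 or depth == 0:
        return evaluate(stones, maximizing)
    scores = [
        minimax(stones - move, depth - 1, not maximizing)
        for move in legal_moves(stones)
    ]
    return max(scores) if maximizing else min(scores)

def best_move(stones: int, depth: int) -> int:
    """Pick the move whose resulting position scores best for us."""
    return max(
        legal_moves(stones),
        key=lambda move: minimax(stones - move, depth - 1, False),
    )
```

For example, `best_move(5, 6)` returns `1`, leaving the opponent with four stones, a position from which every reply loses. The `depth` argument plays the role of the time limit: the deeper the search is allowed to go, the more futures it examines.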

Chess AI nowadays uses more sophisticated algorithms and does not need a supercomputer to run.

Jeopardy

The television quiz show Jeopardy was the next game to crown a machine champion. In 2011, IBM’s Watson supercomputer defeated two former Jeopardy champions. Development started back in 2006, and within 4 years, Watson was capable of beating humans consistently.

By far the most significant achievement of Watson was its ability to take the clues provided by the game, phrased in English, and consult its knowledge base (also in English) for the right answer. Natural languages such as English, while relatively easy for people to use, are notoriously hard for computers to understand. Simply put, computers lack the common sense required to make sense of the sentences we use. Through its vast knowledge base, which famously included all the published Wikipedia pages, and through algorithms designed to make sense of natural languages, Watson found the right answers often enough to beat its competitors.

Atari

Machine Learning began to pick up in the 2010s, primarily due to advancements in Computer Vision. In 2012, leading researchers in Machine Learning demonstrated that computers could reliably classify thousands of different objects in an image. Two short years later, in 2014, Google researchers uncovered a way to use the pixels displayed by an Atari machine to learn how to play over 40 games.

The Artificial Intelligence method used by the researchers, called Reinforcement Learning, changed the landscape of using machine intelligence to play games. The bots were able to constantly improve themselves by trial and error. It is somewhat analogous to training your dog to give you a high-five: if the dog performs what you want correctly, you give it a treat. It will take the dog some time to understand why you sometimes give it treats and sometimes don’t, but with enough trials, it will learn.

By simply telling bots the current state of the game, their score, and that they should improve their scores, the scientists taught bots to play games.
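The trial-and-error loop described above can be sketched with tabular Q-learning, one of the simplest Reinforcement Learning algorithms. This is an illustration only: the Atari work learned from raw pixels with neural networks, whereas the toy corridor world, the reward of 1 for reaching the rightmost cell, and all names and parameter values below are assumptions made for this example.

```python
import random
from typing import List, Tuple

N_STATES = 5          # corridor cells 0..4; the rightmost cell is the goal
ACTIONS = (-1, +1)    # index 0 moves left, index 1 moves right

def step(state: int, action_index: int) -> Tuple[int, float, bool]:
    """Apply an action; the 'treat' (reward 1) comes only at the goal."""
    next_state = min(max(state + ACTIONS[action_index], 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done

def train(episodes: int = 500, alpha: float = 0.5, gamma: float = 0.9,
          epsilon: float = 0.2, seed: int = 0) -> List[List[float]]:
    """Learn a table of action values purely from trial and error."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        for _ in range(100):  # cap the length of one episode
            # Explore at random sometimes (and whenever values are tied),
            # otherwise take the action that currently looks best.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            next_state, reward, done = step(state, action)
            # Nudge the estimate toward reward plus discounted future value.
            target = reward + (0.0 if done else gamma * max(q[next_state]))
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if done:
                break
    return q

def greedy_policy(q: List[List[float]]) -> List[str]:
    """Best known action for every non-goal cell."""
    return ["left" if row[0] > row[1] else "right" for row in q[:-1]]
```

Calling `greedy_policy(train())` shows what the bot has learned for each cell. Nothing in the code tells the bot that moving right is correct; it only ever sees the state, the reward, and the instruction to collect more reward.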

Pacman

Though most Atari games were conquered by AI, Pacman proved elusive due to its unpredictable nature. That didn’t last long, though. Microsoft Maluuba researchers developed a multi-agent reinforcement learning model that achieved the top score in the game, a feat no human had achieved before.

In Maluuba’s learning model, different agents track different goals with different payouts. Each agent tracks only a single pellet, fruit, ghost or edible ghost. For example, since ghosts can end the game by catching Pacman, the recommendations of ghost-tracking agents are given more weight than those of other agents.
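One way to picture how recommendations from many single-purpose agents can be merged is a weighted vote over the available moves. The sketch below is a simplified illustration of that idea, not Maluuba’s actual model; the `combine` function, the score values and the weights are all hypothetical.

```python
from typing import Dict, List

ACTIONS = ["up", "down", "left", "right"]

def combine(recommendations: List[Dict[str, float]],
            weights: List[float]) -> str:
    """Add up each agent's weighted scores and return the best action.

    Each recommendation maps an action to how much one agent likes it
    (negative means the agent wants to avoid it). Actions an agent does
    not mention contribute nothing for that agent.
    """
    totals = {action: 0.0 for action in ACTIONS}
    for recommendation, weight in zip(recommendations, weights):
        for action, score in recommendation.items():
            totals[action] += weight * score
    return max(ACTIONS, key=lambda action: totals[action])

# A pellet-tracking agent likes going left; a ghost-tracking agent
# warns that a ghost is waiting there.
pellet_agent = {"left": 1.0, "up": 0.2}
ghost_agent = {"left": -1.0}
```

With the ghost agent weighted five times as heavily, `combine([pellet_agent, ghost_agent], [1.0, 5.0])` returns `"up"`, steering away from the ghost. With the ghost agent weighted at only 0.5, the same call returns `"left"` and the pellet wins out.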

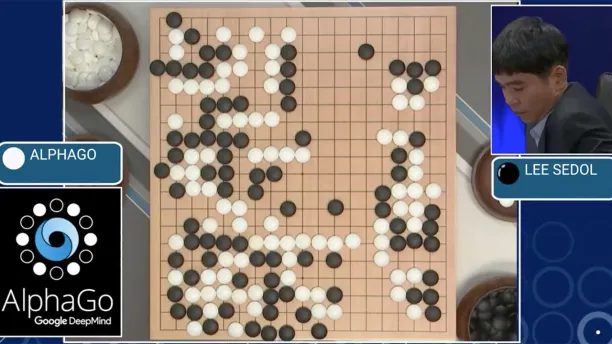

Go

While Deep Blue was a milestone, there comes a point where calculating all possible outcomes becomes infeasible. Such was the case with the ancient board game of Go, a strategy game that has a much larger move pool than chess. Up until 2015, the best bots were only able to defeat amateur players, unable to use viable long-term strategies. That was, of course, before all the computer Go records were shattered by Google DeepMind’s AlphaGo. In March 2016, it handily beat Lee Sedol, one of the highest ranked Go players in the world, and in May 2017, beat the highest ranking Go player in the world, Ke Jie.

AlphaGo’s success was again enabled by Reinforcement Learning. It was trained on a vast number of games, from which it learned the moves that most frequently led to victories. By that point, AlphaGo was already of the caliber of a low-ranking professional player. The secret to its success, however, was the matches it played against itself. By changing its playstyle bit by bit on each iteration, and picking the playstyle that resulted in a win, it improved itself to be the best on the planet.

However, top Go programs are not immune to being defeated by humans. An amateur studied and defeated such a program in 2023.

Texas Hold’em Poker

In early 2017, an AI called Libratus, built by researchers at Carnegie Mellon University, beat four professional players in a competitive tournament of No-Limit Texas Hold’em. This is the version of Hold’em with no betting limit, and it is one of the most popular card games. AI had previously solved Limit Hold’em in 2015.

In games like Go and Chess, you have full knowledge of the state of the game at all times. Texas Hold’em, by contrast, is an imperfect information game, where you do not know the full state of the game. This makes things much harder for AI, as it has to reason about which cards its opponent might hold and be able to see through bluffing.

Libratus walked away with (fake) chips worth $1.8 million, leaving every one of the pros with a negative balance. It only played, however, against a single opponent at a time. This significantly limits the number of possibilities the AI has to deal with, but Libratus is still a tremendous achievement.
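To make the imperfect-information point concrete, here is a minimal sketch of reasoning over an opponent’s hidden card. It is not how Libratus works, and it ignores bluffing entirely. It assumes a made-up game in which each player holds one card from a deck of distinct ranks, the higher card wins, and every unseen card is equally likely to be in the opponent’s hand. The function names and chip amounts are hypothetical.

```python
from fractions import Fraction
from typing import List

def win_probability(my_card: int, deck: List[int]) -> Fraction:
    """Chance that my_card outranks the opponent's hidden card.

    The opponent is assumed to hold any one of the other cards in the
    deck with equal probability.
    """
    unseen = list(deck)
    unseen.remove(my_card)  # my own card cannot be in the opponent's hand
    wins = sum(1 for card in unseen if my_card > card)
    return Fraction(wins, len(unseen))

def expected_value_of_call(p_win: Fraction, pot: int, bet: int) -> Fraction:
    """Average chips gained by calling: win the pot or lose the bet."""
    return p_win * pot - (1 - p_win) * bet

deck = list(range(1, 14))  # thirteen distinct ranks, 1 (low) to 13 (high)
```

Holding a 10, `win_probability(10, deck)` is 3/4, so calling a bet of 50 into a pot of 100 gains 62.5 chips on average. Holding a 2, the same call loses 37.5 chips on average. A real opponent’s bet also carries information about their hand, which is where bluffing comes in and where the problem becomes far harder than this sketch suggests.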

Dota 2

In its 2018 attempts, OpenAI’s Dota bot defeated talented human players but, competing on fair terms with humans, lost to human champions. Initially, the bot and its opponents could pick from only a few of the characters in the game, which made it simpler.

In a previous attempt, the AI system developed by OpenAI tried its hand at Dota 2 (though a simplified form of it), and was able to beat many of the professional players it was matched against. Dota 2 is traditionally a 5v5 game with a lot of different variables, and the bot only competed in a very small part of it. We should also note that, unlike the AIs in the previous examples, the bot itself was defeated repeatedly over the course of the day.

What OpenAI did is still a major achievement, because the environment in which the AI must work is vastly different from that of the earlier games. The board and card games mentioned above are all turn-based: moves are made one at a time, and the result of a move is immediately visible. Dota 2, however, is real-time.

In real-time games, you are constantly making decisions on what to do. Even not doing anything becomes a decision. Given these circumstances, you cannot afford to wait to think about your next move. The possibilities available to you change every second, and thinking about your next move for longer than an instant usually means your loss. By the time you act, that possibility may have passed already.

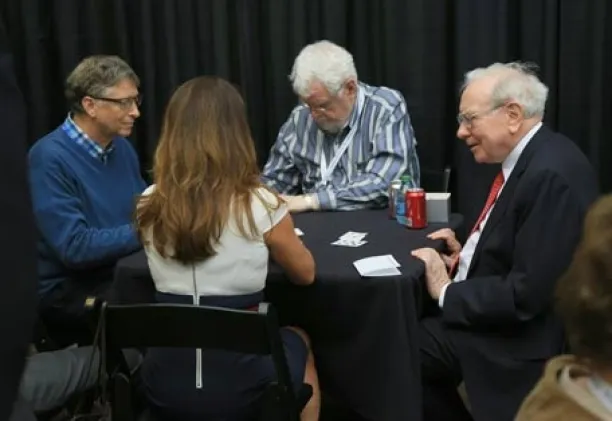

A popular game yet to be conquered: Bridge

Bridge is one of the most popular card games, especially among seniors. Given Warren Buffett’s and Bill Gates’ fascination with the game, we can say it is also popular with the ultra-rich.

Photo: Berkshire Hathaway Chairman and CEO Warren Buffett (right) plays bridge opposite Microsoft co-founder Bill Gates (left) outside the Borsheims jewelry store in Omaha, Neb., May 3, 2015. (AP Photo/Nati Harnik)

Despite advances in the field, no bridge-playing AI has yet beaten world champions repeatedly. This is possibly because bridge is a game of incomplete information, and because it has attracted less research interest than more popular games like chess or Go. An interesting characteristic of bridge is that the question “Is the problem of deciding the winner in double-dummy bridge hard in any complexity class?” is an unresolved problem in computer science.

Super Mario

Playing games is one thing; creating games is another. A team from Georgia Institute of Technology published a research paper describing their AI system that can recreate classic titles like Super Mario just by watching the game being played, without access to its code.

Future

Given the current landscape, we can only assume that real-time games will start to be tackled by many different researchers. A big part of the challenge in AI is learning what can be done. Now that we know artificial agents can act in real time, we will see a surge in attempts to create bots that do well in those games.

In late 2016, OpenAI unveiled Universe, a software platform for training AI agents in a collection of 1000+ environments, including games, webpages, and computer applications. OpenAI wants it to be the new playground for training artificial agents, by making it easy to apply a technique from one game to another. Universe has not made a significant impact in the Machine Learning community, but OpenAI’s success with Dota 2 is bound to increase interest.

One game in particular is currently being pushed into the spotlight, by none other than the famed game developer Blizzard and Google DeepMind. That game is StarCraft II, a strategy game that frequently requires you to manage hundreds of little units to achieve an end goal. The two companies are releasing a toolkit for connecting with the game and a large number of replays for you to train your bots on. We should not expect StarCraft II to be “solved” for some time, but we can never know.

We are taking steps toward the next big thing. No one knows how many steps it will take, but one thing is for sure: we will get there.

Cem has been the principal analyst at AIMultiple since 2017. AIMultiple informs hundreds of thousands of businesses (as per similarWeb) including 60% of Fortune 500 every month.

