Ultimate Guide to the State of AI Technology in 2024

AI technology can be divided into 3 layers. While we will likely see incremental improvements in algorithm design and in the application of those algorithms to specific domains, a step change is possible in computing if breakthroughs are achieved in quantum computing.

- Algorithms that enable machine decision making including artificial neural networks (ANN), Bayesian inference and evolutionary computing. ANNs can be categorized by their depth (i.e. number of layers) and structure (i.e. how nodes are connected). Most recent progress in AI was due to deep neural networks (also called deep learning).

- Computing technology to run those algorithms: Computing is key in AI. Advances in computing power have enabled the wave of AI commercialization driven by deep learning since the 2010s.

- Application of those algorithms to specific domains, including reinforcement learning, transfer learning, computer vision, machine vision, natural language processing (NLP), and recommendation systems.

AI Algorithms

Algorithms are like recipes that AI systems follow to learn from data or to make decisions. Advances in algorithm design are key to the advancement of AI.

Artificial Neural Networks (ANNs)

A neural network is a popular machine learning technique inspired by the networks of neurons in the human brain.

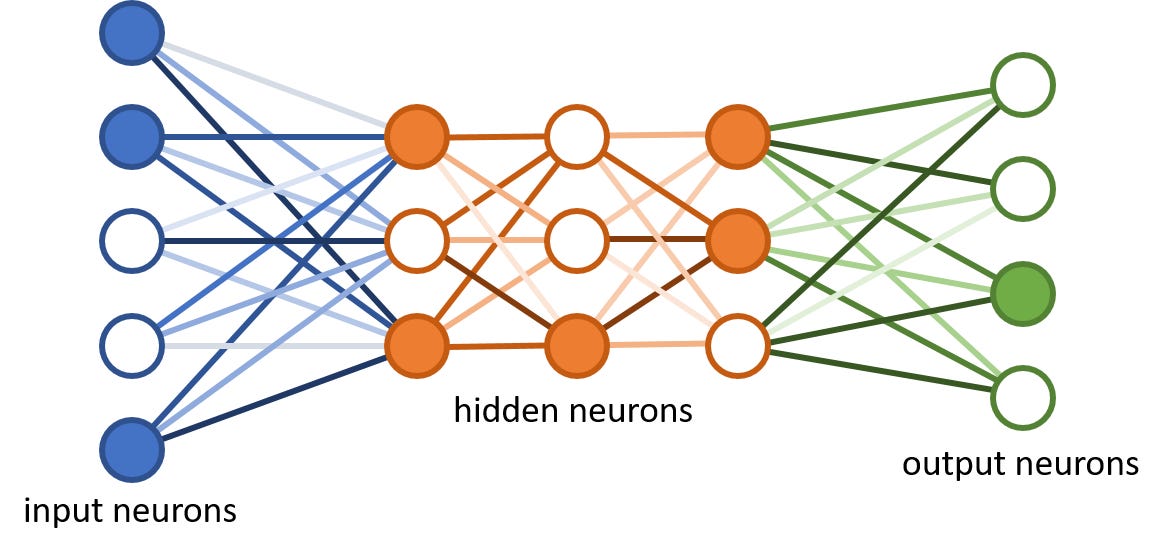

These networks consist of interconnected artificial neurons. Every neuron processes its input data with a predefined mathematical function and produces an output, which becomes the input for other neurons. An ANN can be divided into input, hidden and output layers. Given the input data, the coefficients (weights) between neurons are adjusted so that the network produces accurate outputs. You can learn more about how neural networks work in our related article.

Geoffrey Hinton, one of the pioneers of deep learning, explained artificial neural networks in quite understandable terms during a recent interview:

You have relatively simple processing elements that are very loosely models of neurons. They have connections coming in, each connection has a weight on it, and that weight can be changed through learning. And what a neuron does is take the activities on the connections times the weights, adds them all up, and then decides whether to send an output. If it gets a big enough sum, it sends an output. If the sum is negative, it doesn’t send anything. That’s about it. And all you have to do is just wire up a gazillion of those with a gazillion squared weights, and just figure out how to change the weights, and it’ll do anything. It’s just a question of how you change the weights.
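
The neuron described in the quote can be sketched in a few lines of Python. This is only a minimal illustration: the function name `neuron_output`, the threshold of zero, and the example weights are assumptions made for this sketch, not part of any particular library.

```python
def neuron_output(activities, weights, threshold=0.0):
    """Multiply each incoming activity by its weight, add them up, then decide whether to fire."""
    total = sum(a * w for a, w in zip(activities, weights))
    # Send the sum onward only if it is big enough; otherwise send nothing (0.0).
    return total if total > threshold else 0.0

# Hypothetical inputs and weights, chosen only to show both cases.
print(neuron_output([1.0, 0.5], [0.8, -0.2]))   # positive sum: the neuron fires
print(neuron_output([1.0, 0.5], [-0.8, 0.2]))   # negative sum: no output
```

Learning, in this picture, means changing the values in `weights` so that the outputs become more useful.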

Data scientists use this technology in decision-making, improved forecasting, image recognition, and robotics.

ANNs can be deep or shallow and can have different structures

ANNs by depth

Shallow networks, which were the focus of significant research in the 1950s and 1960s, could not learn complex tasks. For example, the book Perceptrons by Marvin Minsky and Seymour Papert famously proved that a single layer of perceptrons (neurons) could not learn even simple logical functions like XOR. At the time, machines were not powerful enough to train deep networks, and this finding reduced research focus on shallow ANNs.
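
The XOR limitation can be illustrated with a short Python sketch. The grid search below is not a proof (Minsky and Papert's argument is mathematical); it only shows that no weight combination on a coarse, arbitrarily chosen grid gets all four XOR cases right, while a hand-wired network with one hidden layer does. All function names and weight values are assumptions made for this illustration.

```python
from itertools import product

XOR = {(0, 0): 0, (0, 1): 1, (1, 0): 1, (1, 1): 0}

def step(x):
    """Threshold activation: fire (1) only for a positive input."""
    return 1 if x > 0 else 0

def perceptron(x1, x2, w1, w2, b):
    return step(w1 * x1 + w2 * x2 + b)

def best_single_layer_accuracy():
    """Try every weight/bias combination on a coarse grid; return the best score out of 4."""
    grid = [i / 2 for i in range(-8, 9)]  # -4.0, -3.5, ..., 4.0
    best = 0
    for w1, w2, b in product(grid, repeat=3):
        correct = sum(perceptron(x1, x2, w1, w2, b) == y
                      for (x1, x2), y in XOR.items())
        best = max(best, correct)
    return best

def two_layer_xor(x1, x2):
    h_or = perceptron(x1, x2, 1, 1, -0.5)    # fires if at least one input is 1
    h_and = perceptron(x1, x2, 1, 1, -1.5)   # fires only if both inputs are 1
    return step(h_or - h_and - 0.5)          # "OR but not AND" is XOR

print(best_single_layer_accuracy())                  # 3: one case is always wrong
print([two_layer_xor(a, b) for (a, b) in XOR])       # [0, 1, 1, 0]
```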

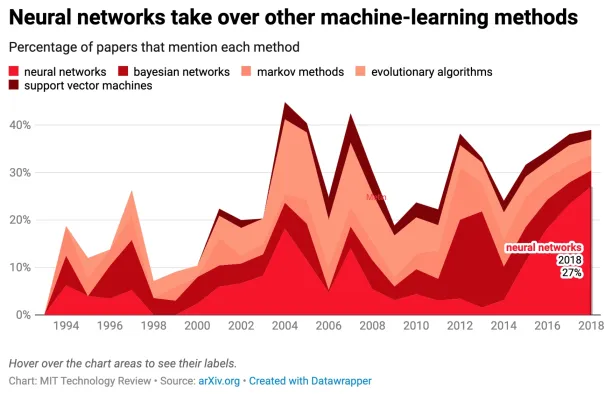

In the 2010s, with the availability of cheap computing power and GPUs, scientists were able to train deep networks in reasonable time frames. Since then, research in AI has focused on deep ANNs (i.e. deep learning). As seen in the image below, neural networks have become the most popular topic of research under AI since 2015.

ANNs by structure

Deep networks can be built in various architectures. Below, we explain some of the most popular/promising architectures.

Recurrent Neural Networks

Recurrent neural networks (RNNs) are a type of recursive neural network (i.e. a network where the same set of weights is applied recursively to inputs) built to operate on a sequence of inputs. RNNs have performed especially well in Natural Language Processing (NLP) tasks where a prediction depends on previous inputs. For example, Android started relying on them by 2015 for text-to-speech synthesis. Feel free to check out the article on RNNs by Andrej Karpathy, formerly Sr. Director of AI at Tesla, which applies them to tasks ranging from writing Shakespeare-style text to generating Linux source code.
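
To make the idea of reusing the same weights across a sequence concrete, here is a minimal sketch of a recurrent network with a single hidden unit. The function name `rnn_forward`, the `tanh` activation, and the parameter values are assumptions for illustration; real RNNs use weight matrices and learn them from data.

```python
import math

def rnn_forward(inputs, w_in, w_rec, bias, h0=0.0):
    """Run a one-unit recurrent network over a sequence and return every hidden state."""
    h = h0
    states = []
    for x in inputs:
        # The same three parameters are reused at every time step;
        # the previous state h carries information about earlier inputs.
        h = math.tanh(w_in * x + w_rec * h + bias)
        states.append(h)
    return states

states = rnn_forward([1.0, 0.0, 0.0], w_in=1.0, w_rec=0.5, bias=0.0)
print(states)  # later states stay non-zero even though the later inputs are zero
```

The hidden state acts as a memory: the first input still influences the output two steps later, which is why this structure suits tasks where a prediction depends on previous inputs.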

Convolutional Networks

Have you ever thought about how Facebook accurately suggests to tag someone in your photo? How does Facebook detect that specific person in the photo? Another network architecture, a convolutional neural network, is the idea behind this image recognition example. Convolution is a specialized linear operation. Convolutional networks are neural networks that use convolution in place of general matrix multiplication in at least one of their layers.
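
As a rough sketch of what a convolutional layer computes, the pure-Python function below slides a small kernel over an image and sums the element-wise products at each position. The name `conv2d`, the toy image, and the edge-detecting kernel are assumptions for illustration. Note that, like most deep learning libraries, this sketch does not flip the kernel, so strictly speaking it computes a cross-correlation.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1) and sum the products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            row.append(sum(image[i + di][j + dj] * kernel[di][dj]
                           for di in range(kh) for dj in range(kw)))
        output.append(row)
    return output

# Toy image: dark on the left (0), bright on the right (1).
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Kernel that responds where brightness increases from left to right.
vertical_edge = [[-1, 1],
                 [-1, 1]]

print(conv2d(image, vertical_edge))  # [[0, 2, 0], [0, 2, 0]]
```

The output is large only where the edge is, and the same small kernel is reused at every position instead of a separate weight for every pixel pair.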

Capsule Networks

Capsule networks aim to mimic the human brain more closely. These networks consist of capsules, and each capsule includes a set of neurons. Each neuron in a capsule handles a specific feature while the capsules work simultaneously, which enables capsule networks to perform tasks in parallel.

A popular application of capsule networks is face recognition. Capsule networks are built to hold inner information in memory. For example, capsule networks are superior to other popular approaches at distinguishing examples like the two images below.

Geoffrey Hinton, one of the pioneers of deep learning, states that capsule networks cut error rates by 45% compared to previous AI algorithms.

Evolutionary Algorithms

An evolutionary algorithm is a heuristic approach that leverages the concept of natural selection to reach good solutions within a reasonable time. It can be used for challenging problems that would take too long to process exhaustively. Today, evolutionary algorithms are often used in combination with other methods, acting as a quick way to find a suitable starting point for another algorithm. Here is how evolutionary algorithms work in short:

- Initialization: Start with a population of solutions.

- Selection: Choose the solutions that perform best.

- Crossover: Mix these solutions to create new solutions.

- Mutation: Introduce new material to new solutions. If you skip this step, you will reach local extrema too quickly without achieving the optimal solution.

- Re-selection: Choose the best-performing solutions between the new generation of solutions and repeat crossover and mutation steps.

- Termination: End the algorithm if you reach the maximum runtime or a predefined level of performance.
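
The steps above can be sketched as follows. This is a minimal illustration on a toy problem (maximizing the number of 1s in a bit string, often called OneMax), not a general-purpose implementation; the function name `evolve` and all parameter values are assumptions chosen for the example.

```python
import random

def evolve(genome_length=20, population_size=30, generations=40,
           mutation_rate=0.05, seed=0):
    rng = random.Random(seed)
    fitness = sum  # toy objective: the number of 1s in the bit string

    # Initialization: start with a random population of solutions.
    population = [[rng.randint(0, 1) for _ in range(genome_length)]
                  for _ in range(population_size)]
    history = []  # best fitness seen in each generation

    for _ in range(generations):
        # Selection: keep the best-performing half as parents.
        population.sort(key=fitness, reverse=True)
        history.append(fitness(population[0]))
        # Termination: stop early once a perfect solution appears.
        if history[-1] == genome_length:
            break
        parents = population[:population_size // 2]

        children = []
        while len(parents) + len(children) < population_size:
            mother, father = rng.sample(parents, 2)
            # Crossover: mix two parents at a random cut point.
            cut = rng.randint(1, genome_length - 1)
            child = mother[:cut] + father[cut:]
            # Mutation: occasionally flip a bit to introduce new material.
            child = [1 - g if rng.random() < mutation_rate else g for g in child]
            children.append(child)

        # Re-selection: parents and children compete in the next generation.
        population = parents + children

    return max(population, key=fitness), history

best, history = evolve()
print(sum(best), history)
```

Because the parents survive unchanged into the next generation, the best fitness in `history` never decreases from one generation to the next.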

To illustrate how evolutionary algorithms work, here is an example of walking dinosaurs from different generations of solutions.

Computing Technology

AI requires computing power for learning. Without powerful computing technologies, AI agents may not give intended results for businesses. Thus, developers aim to create stronger computing technologies to improve AI performance. To do that, they may build new technologies or improve current hardware so that it can handle more complex workloads. Unlike the advances in AI algorithms and their applications, computational power is quantifiable, which gives us a way to measure AI progress.

Computing power used in model training is increasing

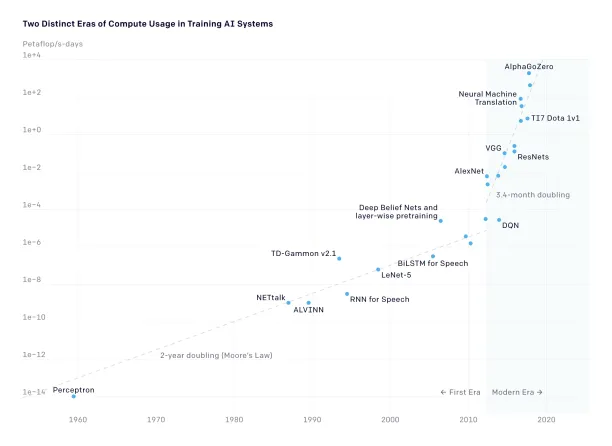

According to an analysis published by OpenAI in 2018, the computing power required for training AI systems used to follow Moore’s Law, doubling roughly every two years. However, since 2012, the computing power used in the largest AI training runs has increased exponentially with a 3.4-month doubling time.
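
To get a feel for the difference between the two doubling times, the short calculation below compares the growth over the same two-year window. The helper name `growth_factor` is an assumption for this sketch; the doubling times are the ones quoted above.

```python
def growth_factor(months, doubling_time_months):
    """How many times larger a quantity becomes after `months` of steady doubling."""
    return 2 ** (months / doubling_time_months)

period = 24  # two years, in months

moores_law_pace = growth_factor(period, 24)    # doubles once
largest_runs_pace = growth_factor(period, 3.4)  # doubles about seven times

print(moores_law_pace)            # 2.0
print(round(largest_runs_pace))   # well over 100
```

Over two years, a 3.4-month doubling time compounds to more than a hundredfold increase, compared with a twofold increase at the Moore’s Law pace.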

In other words, the AI advances that require the most computing power and time (such as Neural Machine Translation and AlphaGo Zero) are now outpacing Moore’s Law. We expect this gap to limit future AI technologies, since hardware advances more slowly even if Moore’s Law continues to hold.

In the figure, you can see that AI systems have grown faster than Moore’s Law since 2012 (the Modern Era). One petaflop/s-day consists of performing 10^15 neural network operations per second for one day, or a total of about 10^20 operations.
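
The arithmetic behind the petaflop/s-day unit is simple enough to check directly. The constant names below are assumptions for this sketch.

```python
OPS_PER_SECOND = 10 ** 15        # one petaflop/s
SECONDS_PER_DAY = 24 * 60 * 60   # 86,400 seconds

ops_per_pfs_day = OPS_PER_SECOND * SECONDS_PER_DAY
print(f"{ops_per_pfs_day:.2e}")  # 8.64e+19, i.e. about 10^20 operations
```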

The computing power used in model training increases due to 3 factors, and we expect all of them to increase at least linearly. However, all of these factors have physical limits, so growth will flatten in the long term:

- Computing power per chip

- Number of chips used to train machine learning models

- Time to train models

Focusing on computing power, we see 3 important trends:

Moore’s Law will break down while new technologies are emerging

Moore’s Law predicts that the number of transistors on a microchip doubles roughly every two years, while the cost halves and the speed doubles. However, chips are now so tiny that we are about to reach the limit. The nano-dimensions of new transistors will cause costs to increase because scientists would have to deal with “atomic” transistors. An article published in Forbes expects that Moore’s Law will break down in 2022-2025.

Although this limitation of classical chips might cause Moore’s Law to break, new emerging computing technologies (like quantum computing) could maintain or even speed up the advances in computing power. We expect these future technologies to replace Moore’s Law and computing power to double in less than two years.

Investment in computing power is increasing

Advances in AI hardware and AI research play critical roles in meeting this growing demand for computing power. In parallel, the research firm CB Insights shares that venture capitalists invested more than $1.5 billion in AI chip start-ups in 2017, nearly doubling the investments made in the previous two years.

Quantum computing could be a game changer

Most of today’s advances enable only incremental enhancement. Yet, quantum computing is a game-changer that can bring a step change.

Here is an example to give you a concrete idea: Google announced that its quantum computer had solved a problem in 200 seconds that would take the world’s fastest supercomputer 10,000 years. IBM later argued that Google had overestimated the problem’s difficulty and that a classical system could solve it in 2.5 days rather than 10,000 years.

Even when comparing 2.5 days (216,000 seconds) with 200 seconds, a difference of roughly 1,000 times, we can see how much computing potential quantum computing has. AI will require such power to maintain its development, considering how fast it evolves. You can read more on this in our in-depth quantum computing article.

Feel free to read our future of AI article for more on quantum computing and other computing trends relevant for AI.

Application of AI Algorithms to Specific Domains

Reinforcement Learning (RL)

In real life, learning is dynamic. We run experiments, observe results and run new experiments. Reinforcement learning tackles interactive learning environments with AI algorithms. With reinforcement learning, the AI agent interacts with its environment and takes consecutive actions to maximize its gains.

Unlike supervised learning, RL does not learn from patterns in labeled examples. Instead, the agent receives numerical rewards for desired outcomes and makes sequential decisions to maximize its total reward. In this process, AI agents keep exploring and updating their beliefs, just as humans learn from their experience in the real world.
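
The reward-driven loop described above can be sketched with tabular Q-learning, one common RL algorithm, on a toy environment. Everything here is an assumption made for illustration: the five-state corridor, the reward of 1 at the goal, and the values of `alpha`, `gamma` and `epsilon`.

```python
import random

N_STATES = 5        # states 0..4 on a line; state 4 is the goal
ACTIONS = (0, 1)    # 0 = move left, 1 = move right

def step(state, action):
    """Toy environment: returns (next_state, reward, done)."""
    next_state = max(0, state - 1) if action == 0 else state + 1
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done

def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # estimated value of each action per state
    for _episode in range(episodes):
        state = 0
        for _t in range(100):  # cap on episode length
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.choice(ACTIONS)  # explore, or break a tie at random
            else:
                action = 0 if q[state][0] > q[state][1] else 1  # exploit
            next_state, reward, done = step(state, action)
            # Update the belief about this action from the observed reward
            # plus the discounted value of the best next action.
            target = reward + (0.0 if done else gamma * max(q[next_state]))
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if done:
                break
    return q

q_table = train()
policy = [max(ACTIONS, key=lambda a: q_table[s][a]) for s in range(N_STATES - 1)]
print(policy)  # 1 means "move right", towards the goal
```

The agent is never told that moving right is correct. It only observes rewards, and the preference for moving right emerges from repeated exploration and updating.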

The most well-known RL example is AlphaGo from Google’s DeepMind, which defeated the world’s number one Go player, Ke Jie, in all three games of their 2017 match. Similarly, RL is widely used in robotics, which also requires goal-based exploration.

Transfer Learning

Transfer learning enables users to leverage a previously used AI model for a different task. For example, an AI model that is trained for recognizing different cars can be used for trucks. Rather than creating a new model, using a pre-trained model can save a significant amount of time. It is smart to use transfer learning in the following conditions:

- It may take too much time to create a new learning process from scratch

- There may not be enough data to handle that specific task

MIT Technology Review also reports that transfer learning can contribute to the social good. A study it covered shows that training a large neural network with 200 million+ parameters on a Cloud TPU can produce CO2 emissions equivalent to those of 5 cars over their lifetimes. By reusing pre-trained models, transfer learning can reduce such heavy use of powerful processing units.

Self-Supervised Learning (Self-Supervision)

Self-supervised learning, also known as self-supervision, is an emerging learning model where training data is labeled autonomously. The model generally tries to take one part of the data as input and predict the other part to train itself and label data accurately. By creating labels for an unlabeled dataset, this technique converts an unsupervised learning problem into a supervised one.
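
As a small illustration of how labels can come from the data itself, the sketch below turns raw, unlabeled text into supervised training pairs by using the preceding words as input and the next word as the label. It uses text rather than images purely for brevity; the function name `make_next_word_pairs` and the `context_size` parameter are assumptions for this example.

```python
def make_next_word_pairs(text, context_size=2):
    """Turn raw text into (context, target) training pairs without human labels."""
    words = text.split()
    pairs = []
    for i in range(context_size, len(words)):
        context = tuple(words[i - context_size:i])  # one part of the data is the input
        target = words[i]                           # the other part becomes the label
        pairs.append((context, target))
    return pairs

pairs = make_next_word_pairs("the cat sat on the mat")
for context, target in pairs:
    print(context, "->", target)
```

Any supervised model could then be trained on `pairs`, even though nobody labeled the original sentence.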

The most popular applications of self-supervised learning are in computer vision. It can be used in image-related tasks like colorization or 3D rotation.

However, today’s self-supervised learning techniques still require significant modifications to train appropriately. Although this approach carries substantial potential towards artificial general intelligence (AGI) in the future, Google shares that we have not yet seen widespread adoption of this model.

Computer Vision

Computer vision includes the techniques of perceiving and distinguishing images with computers. Image quality improvement, image matching, object recognition, and image reconstruction are all subcategories of computer vision. The main goal of this technology is to enable computers to understand images and replicate human visual perception.

The use cases include tumor detection in healthcare, tracking UAVs in the military, emotion detection, and car plate recognition. Unlocking our phones with face recognition is another daily-life example of computer vision.

Natural Language Processing (NLP)

Natural Language Processing (NLP) is concerned with how AI agents perceive human languages. This technology involves text and speech recognition, natural language understanding, generation, and translation.

NLP is currently used in chatbots, cybersecurity, article summarization, instant translation, spam detection, and information extraction.

Social media is a common NLP use case for information extraction. For example, Cambridge Analytica relied on a few pieces of structured data like voters’ likes for its now infamous segmentation of the American voters. Users’ posts contain far more detailed data on those users’ preferences which can be analyzed for better segmentation. This is one of the reasons why data privacy is increasing in importance with more restrictive legislation passed over time.

Recommendation Systems

Recommendation systems aim to predict the users’ future preferences based on their previous information. This information can include general information, photos you like, or items you have purchased. These systems enable users to have personalized experiences and allow them to discover new things that interest them.
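
One simple way to predict preferences from past behavior is user-based collaborative filtering: find users with similar ratings and suggest what they liked. The sketch below is a minimal illustration with made-up users, items and ratings; it is not how any of the services mentioned in this article actually implement recommendations.

```python
import math

# Hypothetical ratings on a 1-5 scale.
ratings = {
    "ana":   {"book_a": 5, "book_b": 4, "book_c": 1},
    "ben":   {"book_a": 4, "book_b": 5, "book_d": 5},
    "chloe": {"book_c": 5, "book_e": 4},
}

def cosine_similarity(a, b):
    """Similarity between two users based on their rating vectors."""
    shared = set(a) & set(b)
    if not shared:
        return 0.0
    dot = sum(a[i] * b[i] for i in shared)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b)

def recommend(user, ratings, top_n=1):
    """Score items the user has not rated by the similarity-weighted ratings of others."""
    scores = {}
    for other, other_ratings in ratings.items():
        if other == user:
            continue
        sim = cosine_similarity(ratings[user], other_ratings)
        for item, rating in other_ratings.items():
            if item not in ratings[user]:
                scores[item] = scores.get(item, 0.0) + sim * rating
    return sorted(scores, key=scores.get, reverse=True)[:top_n]

print(recommend("ana", ratings))  # ['book_d']
```

Ana's ratings resemble Ben's much more than Chloe's, so the item Ben rated highly is ranked first.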

The most common examples are Amazon, Netflix, and Spotify, which recommend books, movies, and music based on your usage history.


To learn more about how these AI technologies will evolve in the future, feel free to read our article on the future of AI.

Cem has been the principal analyst at AIMultiple since 2017. AIMultiple informs hundreds of thousands of businesses (as per Similarweb), including 60% of the Fortune 500, every month.
